Sean Clark's Blog

This weekend saw the first Creator Fair at the National Space Centre in Leicester. A diverse collection of "makers" turned up to show their work from a wide range of disciplines - including crafts, 3D printing, electronics, mechanics, toy making and more. I was there to show off some of the work we do at Interact Labs and to encourage people to think about how technology can be used in the arts.

There was a steady stream of visitors on both days - especially in the afternoons. This included children of all ages and lots of enthusiastic parents.

I was giving away 3D printed Minecraft swords, which went down very well with the young people. I must have produced at least 25 of them over the weekend!

There's talk of the event running again next year - which would be a great idea. You can see my pictures of the event here on Flickr.

It has been said that a 'digital artwork' exists independently from the computer and display technology used to present it. Indeed, it is often argued that a key feature of digital art is that it is infinitely reproducible and that there is no such thing as an 'original'.

While I agree with this in some ways, I increasingly find myself caring about the aesthetics of the technology used to 'show' my work. In fact, I no longer really see the creative idea, computer program and technology as separate things. They are all part of the artwork and I now strive to maintain full control over the equipment used in my exhibitions (no longer relying on pieces of kit supplied by a gallery).

This can have practical implications when producing new work. Since I no longer show my work on third party computers and screens, each new digital artwork I produce needs to have a dedicated screen and computer, plus custom frames and mounts. A collection of ten artworks means ten times this.

This was proving to be problematic - especially given my preferred platform of a Mac mini computer and HD screen. The equipment to display an artwork was costing up to £1000 and was not reusable without destroying the artwork after the exhibition.

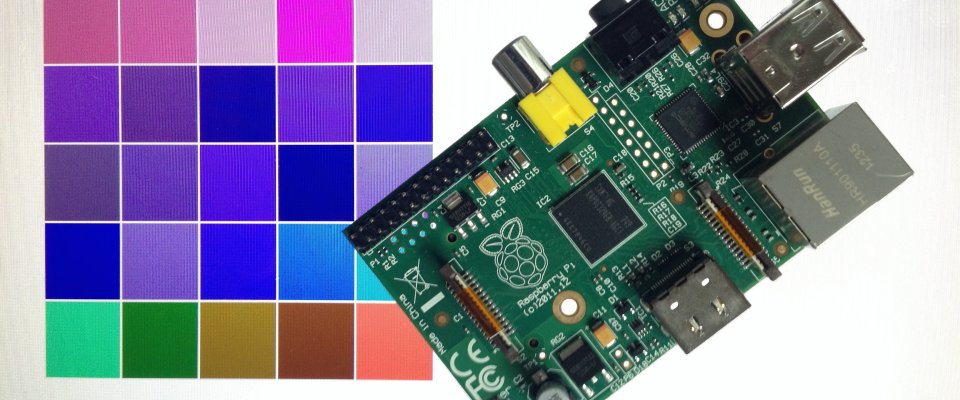

However, the growth in low cost computers and compact HD screens is changing this. It is now possible to purchase an Android or Linux computer such as a Raspberry Pi or PCduino for around £30 and a 'component' HD display (intended for a tablet) for £70. Add a laser cut or 3D printed enclosure and you have a personalised, unbranded computer system, complete with computer and screen for well under £200.
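
To put the saving in perspective for a ten-piece collection, here is a quick back-of-envelope sketch in Python. It only uses the upper-bound figures quoted in this post ("up to £1000" and "well under £200" per artwork); the function and variable names are my own, for illustration only.

```python
# Per-artwork display costs in GBP, taken as upper bounds from the post.
MAC_MINI_SETUP = 1000   # Mac mini plus HD screen: "up to £1000"
LOW_COST_SETUP = 200    # Pi-class computer, tablet display, enclosure: "well under £200"


def collection_cost(per_artwork: int, artworks: int) -> int:
    """Total hardware cost when every artwork needs its own dedicated kit."""
    return per_artwork * artworks


artworks = 10
mac_total = collection_cost(MAC_MINI_SETUP, artworks)
low_total = collection_cost(LOW_COST_SETUP, artworks)

print(f"Mac mini route: up to £{mac_total}")
print(f"Low-cost route: under £{low_total}")
```

Since each artwork keeps its hardware for good, the total scales directly with the size of the collection, which is why the per-unit price matters so much.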

These little computers are not super fast, but they are certainly powerful enough to run the artworks I am creating at the moment. Plus there are plenty of low-cost screens to choose from to match the artwork being created. I found a great source of 1280x720 pixel 10" screens on eBay recently.

A lot of my new work is created in JavaScript and intended to run under the Chrome web browser on Android. However, I've now started to create more work using the Processing language. This is able to produce Linux-compatible Java executable code that runs on the Raspberry Pi (thanks to Paul Brown for pointing me in this direction). These technologies are also inherently Internet aware - which is a key part of my 'connected artworks'.

I'll be posting some images of the 'digital art objects' I've been creating with this technology shortly. The first public display of them will be at the Kinetica Art Fair in October.

Interesting things are happening with Virtual Reality again. While I'm still not sure that it will be more than a niche technology in the long run (I think 'augmented' is more interesting than 'virtual'), it is quite fun to see something I was experimenting with over 20 years ago back in the spotlight.

Two approaches are popular at the moment. One is the classic VR 'goggles' system, like the Oculus Rift, in which a special stereo display and head tracker are worn by the user and driven by a computer. The other is to take advantage of the high-quality display, position tracker and computer many of us already have in our pockets - in the form of a smartphone - and simply provide a housing and lenses to enable it to be used as a 3D display. The best known version of the latter approach is the Google Cardboard - literally a cardboard holder with plastic lenses and a control switch.

You might think that dedicated VR goggles would have significant advantages over the smartphone approach. This is true to an extent, but the power and quality of modern smartphones is such that the difference is actually much less than expected.

I have been experimenting with various Google Cardboard designs and have managed to source lenses for as little as £2.50 a pair and whole kits from eBay for £6 (or a bit more from here). On the whole they all work pretty well, but can be a little flimsy.

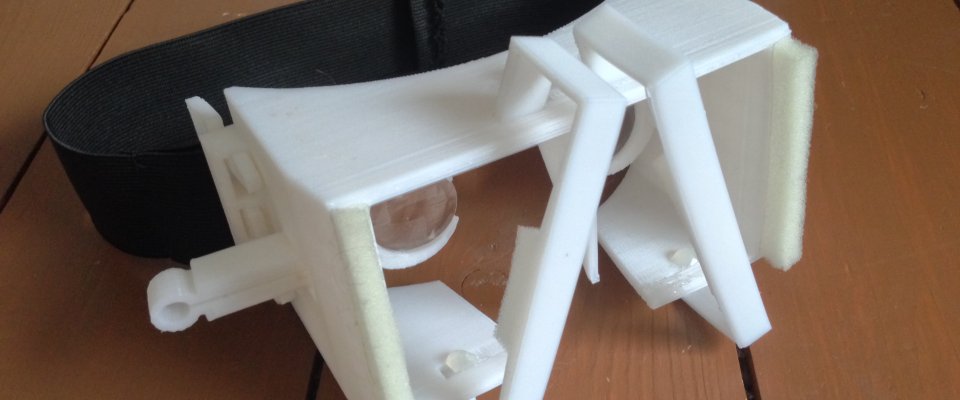

To find something more robust I have been looking at plastic and 3D printed alternatives. My favourite so far is the OpenDive by Durovis. This is a 3D-printable version of the commercial Durovis Dive product, a smartphone VR housing that actually predates the Google Cardboard.

While it doesn't have the magnetic switch of the Google Cardboard (although one can be easily added), it does the same basic job - that is, to hold your smartphone in front of some lenses. It also 3D prints pretty well - albeit taking almost 5 hours to produce on my Replicator. What's more, even with the lenses and an elastic head strap the total cost of the project is less than £5.

Of course, hardware is only one part of the system. Durovis also provides a free Unity plug-in that does head tracking using your phone's position tracker and renders your 3D model as two side by side views for stereo vision. This allows you to use the Unity game engine (a free version is available) to create interactive 3D worlds and compile them for Android and iOS.
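
For anyone curious what "two side by side views" means in practice, here is a minimal Python sketch of the idea. To be clear, this is not the Durovis plug-in or any Unity API: the `Viewport` class, the function names and the 64 mm eye separation are all assumptions of mine, chosen purely for illustration. The screen is split into two halves, and a virtual camera is placed for each eye, offset slightly to either side of the tracked head position.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Viewport:
    """A rectangle of screen pixels (hypothetical helper type)."""
    x: int
    y: int
    width: int
    height: int


def side_by_side_viewports(screen_w: int, screen_h: int) -> Tuple[Viewport, Viewport]:
    """Split the screen into a left-eye half and a right-eye half."""
    half = screen_w // 2
    left = Viewport(0, 0, half, screen_h)
    right = Viewport(half, 0, screen_w - half, screen_h)
    return left, right


def eye_positions(head: Vec3, right_dir: Vec3, eye_separation: float = 0.064) -> Tuple[Vec3, Vec3]:
    """Offset the head position by half the eye separation along the head's
    'right' direction (a unit vector). 0.064 m is an assumed typical value."""
    h = eye_separation / 2
    left_eye = tuple(p - d * h for p, d in zip(head, right_dir))
    right_eye = tuple(p + d * h for p, d in zip(head, right_dir))
    return left_eye, right_eye


# Example: a 1280x720 phone screen, head at the origin looking straight ahead.
left_vp, right_vp = side_by_side_viewports(1280, 720)
left_eye, right_eye = eye_positions((0.0, 0.0, 0.0), (1.0, 0.0, 0.0))
print(left_vp, right_vp)
print(left_eye, right_eye)
```

A real renderer would then draw the scene once from each eye position into its half of the screen, with the head tracker updating the head position and direction every frame.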

This results in a complete 'home-brew' VR system that you can experiment with for the cost of a large latte or two at Starbucks! What's more, the Unity environment is also compatible with the Oculus Rift (although you have to upgrade to the Pro version) so the new skills you develop will be transferable to this VR environment.

Field Broadcast is an innovative arts platform that connects artists, audiences and obscure locations through live video broadcasts. It's a really successful project that has used a Windows and Mac app for the last few years to alert people when a broadcast is starting and then deliver the video stream to their desktop.

For the latest series of broadcasts Cuttlefish was asked to create a mobile app for Android and iOS that would allow mobile users to receive alerts and broadcasts on their smartphones. The Android app went live a couple of weeks ago, and the iOS app was finally approved by Apple this weekend.

I've been using the app myself to tune in to the recent broadcasts and have to say I'm hooked. When the broadcast alert arrives you never quite know what you are going to see. It could, literally, be a transmission from a field, or - as is the case with some of the current broadcasts - from a riverside, and even from a row boat.

I've taken a few screen grabs of the recent broadcasts that you can find on my Flickr here. To see the next live broadcast yourself you can download the desktop or mobile apps from www.fieldbroadcast.org. All downloads are free.

Last night was the private view of the Automatic Art exhibition at the GV Art Gallery in Marylebone, London. The show was curated by Ernest Edmonds and presents 50 years of British art that is generated from strict procedures.

The artwork on display ranged from constructivist sculptural forms, through systems-based paintings and drawings, to computer-based and interactive artworks. It was put together in a very coherent way, with background materials and supporting information, and made full use of the multi-level and multi-room gallery space.

The private view was very well attended, with many of the artists involved in the show in attendance. This provided an opportunity for me to catch up with quite a few friends and colleagues from over the years. These included people from LUTCHI (the research centre at Loughborough University where I began my graduate career in 1989), William Latham (who designed cover art for The Shamen in the 1990s, and whose first website I built), friends associated with the Computer Arts Society and present-day colleagues from the IOCT at De Montfort University. It ended up being a very enjoyable night - just a pity I had to get a train back to Loughborough at 10pm!

The exhibition is open to the public from today (Friday 4 July) and ends on Saturday 26 July 2014. Entrance is free. My pictures from the set-up and opening can be found here on Flickr.

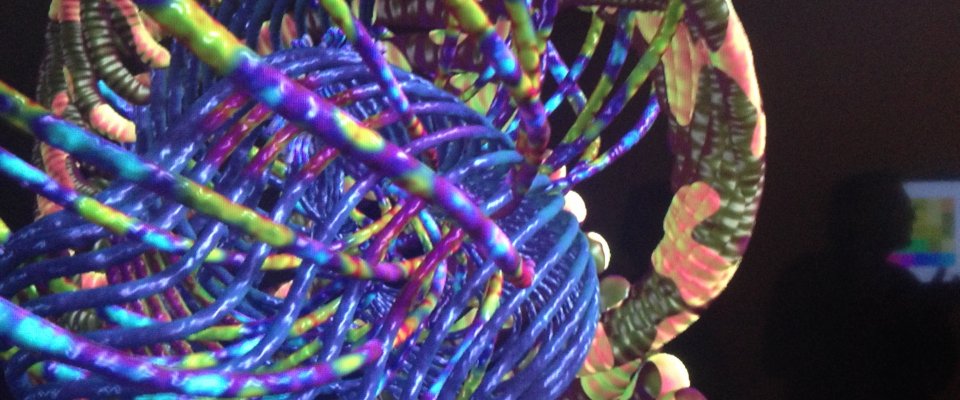

The full list of artists featured in the exhibition is Stephen Bell, boredomresearch, Dominic Boreham, Paul Brown, John Carter, Harold Cohen, Nathan Cohen, Trevor Clarke, Ernest Edmonds, Julie Freeman, Anthony Hill, Malcolm Hughes, Michael Kidner, William Latham (picture attached), Peter Lowe, Kenneth Martin, Terry Pope, Stephen Scrivener, Steve Sproates, Jeffrey Steele, Susan Tebby and myself.

We've been doing a fair amount of work with 3D printing at Interact Labs over the past few months. More recently we have started to get into 3D scanning to help us create models for printing. One of the easiest 3D scanning technologies to work with is 123D Catch from Autodesk. This software enables you to "scan" an object by simply taking photographs of it from multiple angles. Once you've taken your pictures you upload them to the Autodesk server, where they are processed and a 3D model is produced. The output is normally fine for 3D printing (and the service is free for non-commercial use).

We wondered how these models might look if, rather than being printed, they were converted to a format suitable for viewing in our Oculus Rift Virtual Reality headset. Using a free 3D modelling program called OpenSpace3D we've been doing just that and the results have been impressive.

We've been scanning a combination of building exteriors and small and large objects and have been combining them to produce little "worlds" that can be viewed on the Oculus Rift. While they are not quite as "real" as the real things, they look good and 123D Catch manages to capture the visual detail, as well as getting the shapes of the objects pretty much spot on.

Check out some pictures of this work-in-progress here on Flickr. The stars of this particular set of images are "Sockman", a public artwork in the centre of Loughborough, and a swan statue from Loughborough park.

I've been doing some work at Interact Labs recently with pioneering UK digital artist Paul Brown. Paul has been creating computer-based artworks since the late 1960s and was looking for some support in recreating a couple of early artworks for an exhibition.

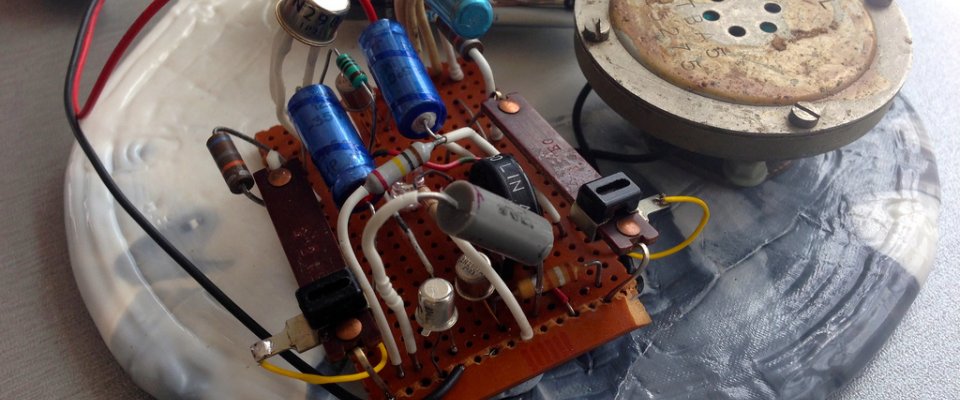

The first of these was an electronic piece that involved sequencing lights and a tone generator that Paul first showed in the 1970s. While the electronic components needed to remake the piece are still available, modern components don't have the "aesthetic" appeal of the originals. Local electronics expert Tony Abbey was brought in to help and was able to source some lovely old transistors, resistors and capacitors that enabled him to recreate a great-looking new prototype of the artwork. The only real difference from the original is the use of LEDs rather than the old filament bulbs of the time. Paul will now be constructing a further ten versions himself.

The second was a computer-based artwork that originally ran on an early 'framestore' computer. Paul had re-coded the work in the Processing language. Processing allows you to save your program as a Linux compatible version and Paul wondered if it might be possible to run the artwork on a Raspberry Pi. As well as having the ability to run the program, the Raspberry Pi has a composite video output that Paul hoped would look good on some early video monitors he had acquired. With a bit of configuring this worked like a dream. An artwork that once ran on a computer the size of a car is now running on one the size of a pack of cards!

Addressing the problem of how to preserve early digital artworks is going to be of increasing importance in the coming years. Technology is constantly changing and older technology becomes 'redundant' surprisingly quickly. Even I am finding that the only way to keep my artworks from the late 1990s running is to keep a stock of old computers running 1990s operating systems.

Some pictures of the work with Paul can be found on the Interact Labs photo stream. For more information about Paul Brown's work visit http://brown-and-son.com or http://www.paul-brown.com.

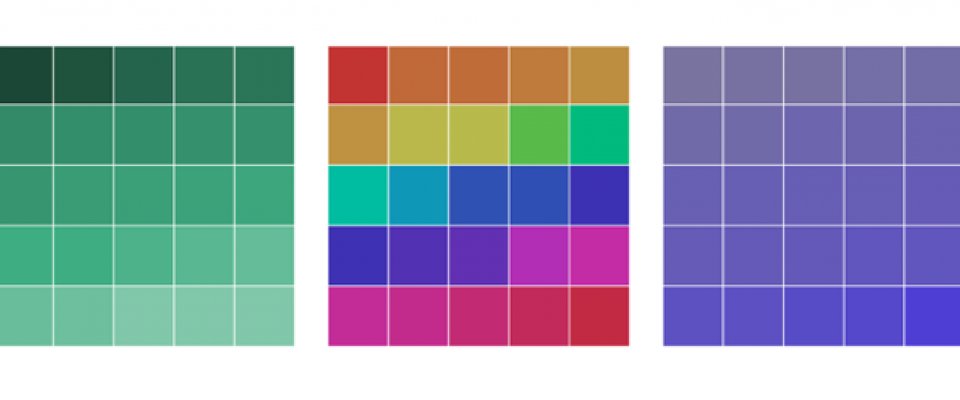

I'm just back from Cardiff setting up an artwork as part of Genetic Moo's latest Microworld event at Arcadecardiff in the Queens Arcade. The piece forms part of a collection of artworks that interact with each other as well as the visitors to the gallery. My piece is a triptych of self-organising grids that swap colours with each other as well as incorporating new colours that it 'sees' in the gallery. The work develops over time, subtly reflecting the history of interactions in the space.
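
To give a flavour of the colour-swapping idea, here is a very rough Python sketch. It is not the code behind the piece: the grid size, the random swapping rule and the fixed "seen" colour are all placeholders I've made up for illustration, and a real version would take its new colours from a camera rather than a constant.

```python
import random

# A colour is an (r, g, b) tuple; a grid is a list of rows of colours.


def make_grid(rows, cols, rng):
    """Fill a grid with random colours (hypothetical starting state)."""
    return [
        [(rng.randrange(256), rng.randrange(256), rng.randrange(256)) for _ in range(cols)]
        for _ in range(rows)
    ]


def random_cell(grid, rng):
    """Pick a random (row, column) position in the grid."""
    return rng.randrange(len(grid)), rng.randrange(len(grid[0]))


def swap_between(grid_a, grid_b, rng):
    """Exchange one randomly chosen cell between two grids."""
    ra, ca = random_cell(grid_a, rng)
    rb, cb = random_cell(grid_b, rng)
    grid_a[ra][ca], grid_b[rb][cb] = grid_b[rb][cb], grid_a[ra][ca]


def absorb_seen_colour(grid, colour, rng):
    """Overwrite one random cell with a colour 'seen' in the gallery."""
    r, c = random_cell(grid, rng)
    grid[r][c] = colour


rng = random.Random(1)  # seeded so the run is repeatable
triptych = [make_grid(4, 4, rng) for _ in range(3)]

for step in range(100):
    a, b = rng.sample(range(3), 2)           # choose two different panels
    swap_between(triptych[a], triptych[b], rng)
    if step % 10 == 0:
        # Stand-in for a camera reading; the orange here is arbitrary.
        absorb_seen_colour(triptych[a], (255, 128, 0), rng)
```

Even with a rule this simple, the state of each panel at any moment depends on everything that has been swapped in or "seen" before it, which is the sense in which the work reflects the history of the space.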

Other pieces on display include a collection of Genetic Moo's responsive, highly organic images and systems; a piece by roboticist Sean Olsen; and works by interactive/digital artists Myles Leadbeatter, Banfield & Rees, Matthew Britten and others. The show is open to the public from 26th May until 1st June, 12pm until 6pm each day.

See the Facebook event page for details of the special events that will be on during the week. I've uploaded an album of pictures from the show to Flickr.

I have a number of exhibitions coming up and will be showing some new works that form part of my new major project. One of the important outcomes of my research over the past few years has been to define what I have come to call "Interconnected Digital Art Systems", or "Digital Art Ecologies". This involves a configuration of digital artworks that are designed to interact with each other as well as their audience. You will have seen this idea developing through my Interact Gallery exhibitions, my Symbiotic exhibition with Genetic Moo towards the end of 2012 and my work on ColourNet with Ernest Edmonds in 2013.

My new work takes this idea further by making all of my artworks part of an interconnected system. I expect almost all artworks I produce over the next few years (at least) to be connected to each other - be it via exchange of light and sound through a physical space, or the exchange of data over the Internet. I have quite a few ideas about how to realise this and will be starting with a new piece at Genetic Moo's Microworld exhibition in Cardiff next week, followed by a related piece at the Automatic Art exhibition at the GV Art Gallery in London in July.

For these two works I will be continuing with the grid of colours theme used in ColourNet, but plan to introduce new structural forms for a planned exhibition at the Kinetica Art Fair in London in October.

Remember, everything is connected!

In 2012 the Interact Gallery hosted a series of rehearsals and a performance for Ximena Alarcon's "Network Migrations" project. The project involved a joint vocal performance between two groups of people linked via the Internet, one group in Leicester and the other group in Mexico City - a distance of around 5,500 miles. Ximena's research paper about the project has now been published in "Liminalities: a Journal of Performance Studies". You can read the paper and find videos and audio from the project here. My documentation of the event can be found here on the archived Interact Gallery site.
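
If you want to sanity-check that distance yourself, the great-circle distance between two points can be estimated with the standard haversine formula. The sketch below is in Python; the city-centre coordinates are approximate values I've assumed for illustration, and the Earth is treated as a perfect sphere, so the result is only a rough figure.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_MILES = 3958.8  # mean radius, spherical approximation


def great_circle_miles(lat1, lon1, lat2, lon2):
    """Haversine distance in miles between two (latitude, longitude) points in degrees."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = phi2 - phi1
    dlam = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * asin(sqrt(a))


# Approximate city-centre coordinates (assumed for illustration).
LEICESTER = (52.64, -1.13)
MEXICO_CITY = (19.43, -99.13)

print(round(great_circle_miles(*LEICESTER, *MEXICO_CITY)), "miles")
```

The answer should land in the region of the figure quoted above, which gives a sense of how far the two groups of performers were from each other.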